In this article, we’re going to discuss Stable Diffusion and how to use it to generate hyper-realistic photographs with AI and create our own virtual models.

Introduction

I’m not sure if you’ve read any news about Artificial Intelligence recently.

Specifically, one stating: “Model generated by Artificial Intelligence earns $11,000 a month on OnlyFans.”

It turns out that a Spanish marketing agency got tired of dealing with human influencers and created its own; one thing led to another, and they ended up having their virtual influencer create adult content.

Many people have been incredulous: “It’s impossible for an AI to replace an influencer.”

Well, whether an influencer or anything else, nobody likes to admit that a machine trained for a specific task is better than a human.

Let me introduce you to Aitana, the famous influencer created by Artificial Intelligence.

Whether we like it or not, AI is already here, and in one form or another it has been around for decades.

Many concepts of artificial intelligence, such as rule-based systems, have been theorized since the 1960s and were put into commercial practice from the 1980s onward.

Ten years ago, you could also use Artificial Intelligence models, but they were more rudimentary and focused on knowledge acquisition and patterns.

But the truth is that in the last year, these models have been refined, and the technical leap has been immense.

In this article, we are going to approach image generators from scratch and discover how they work.

AI 2.0 – What is it?

Personally, I distinguish two types of accessible AI or Machine Learning models:

AI 1.0: Classical models, designed to create rules and learn from a specific dataset.

AI 2.0: Generative models, which learn from data to create something new.

AI 1.0, as I like to call it, marked the beginning of publicly available learning algorithms such as K-Means, Naïve Bayes, and Decision Trees.

All these models were based on providing a dataset and getting a response, and today they are still used.
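To make the AI 1.0 idea concrete, here is a minimal K-Means sketch in plain Python (K-Means being one of the algorithms listed above). You hand it a dataset and it hands back an answer, in this case the centre of each group it finds. The transaction amounts are made-up illustration data, and real fraud-detection systems are far more elaborate than this.

```python
def kmeans_1d(values: list[float], k: int, iterations: int = 20) -> list[float]:
    """Group 1-D values into k clusters with Lloyd's algorithm; return the cluster centres."""
    data = sorted(values)
    # Start with k centres spread evenly across the sorted data.
    step = (len(data) - 1) / max(k - 1, 1)
    centroids = [float(data[round(i * step)]) for i in range(k)]
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest centre.
        clusters: list[list[float]] = [[] for _ in range(k)]
        for v in data:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            clusters[nearest].append(v)
        # Update step: move each centre to the mean of its cluster (keep it if empty).
        new_centroids = [sum(c) / len(c) if c else centroids[j] for j, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # converged: no centre moved
        centroids = new_centroids
    return centroids


# Made-up card transaction amounts: mostly small purchases plus two large ones.
amounts = [12, 15, 11, 14, 980, 1020, 13]
print(kmeans_1d(amounts, k=2))  # → [13.0, 1000.0]
```

The data goes in and a description of it comes out; nothing new is created.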

Online payment companies still rely on conventional models to detect credit card fraud.

I still use them to understand my passions from a rational point of view.

AI 2.0 doesn’t provide answers, it provides content.

The main difference is that before, you provided data and AI helped you understand it; now, you provide data and it generates new data for you.
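For contrast, here is the AI 2.0 idea reduced to its bare minimum: a word-level Markov chain that learns from a text which words tend to follow which, then uses that to produce new word sequences. It is a toy with no neural network at all, and nothing like how Stable Diffusion or ChatGPT work internally, but it shows the shift from “data in, answer out” to “data in, new data out”. The function names and the training sentence are illustrative.

```python
import random
from collections import defaultdict


def train_markov(text: str) -> dict[str, list[str]]:
    """'Learn' from data: record which words follow each word in the text."""
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Create new text by repeatedly picking a word that followed the current one in the data."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = transitions.get(word)
        if not followers:
            break  # this word was never followed by anything in the training text
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


corpus = "the model learns from the data and the model creates new data from the patterns"
chain = train_markov(corpus)
print(generate(chain, start="the", length=8))
```

The output may be a sentence that never appears in the training text, built only from patterns found in it.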

Since 2020, there has been a qualitative leap in the availability (and quality) of Artificial Intelligence models for personal use.

These technologies have also been around for many years, but never before have they been able to generate even moderately realistic content.

You might be familiar with ChatGPT, a text assistant that is useful in almost any situation.

It’s useful for learning, translation, analysis, programming, synthesizing online resources, and much more.

It’s perhaps the most popular example of this category.

Stable Diffusion

Stable Diffusion is a generative Artificial Intelligence model. It is trained on a large dataset of images paired with text descriptions; by providing a description of what we want to obtain (the prompt), we can generate one or more images.

This type of Artificial Intelligence recognizes patterns in the data and seeks to replicate them. It typically relies on complex neural network models.

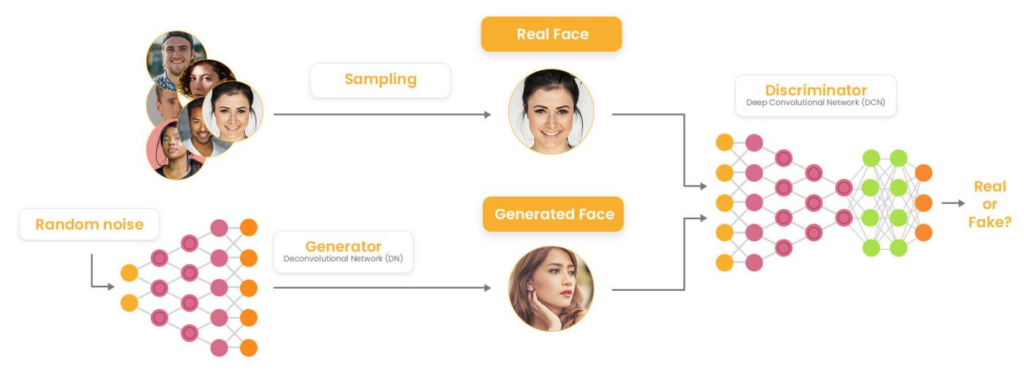

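There are several kinds of generative models, depending on the underlying architecture. A good portion of them are based on GANs: Generative Adversarial Networks.

Depending on the software and its purpose, there are other types as well, such as diffusion models. Stable Diffusion itself is a latent diffusion model: it starts from random noise and removes it step by step, guided by the prompt. However, to get a simple understanding of AI 2.0, let’s focus on the basics of GANs, which also learn to turn random noise into realistic images.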

Two neural networks are used: a generator and a discriminator.

Both are trained together on a dataset of real photographs, but each has a specific purpose.

The first, as its name implies, is responsible for generating the images we requested using its training on real images.

But how does it know if they are valid?

Here comes the second neural network of our artificial intelligence model, the discriminator.

The discriminator is shown both real images and the generator’s output, and must determine whether each image passes as authentic or not.

In other words, during training the generator starts from random noise and keeps refining what it creates until it can deceive the discriminator, while the discriminator keeps getting better at spotting fakes.
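The tug-of-war described above is usually expressed as two loss functions that the networks take turns minimizing. The sketch below keeps only that part, in plain Python: `d_real` and `d_fake` are hypothetical stand-ins for the discriminator’s output (its probability that an image is real) on one real and one generated image. In an actual GAN these numbers come from neural networks and are averaged over batches of images. The generator loss shown is the common “non-saturating” variant.

```python
import math


def bce(p: float, target: float) -> float:
    """Binary cross-entropy between a predicted probability p and a 0/1 target."""
    eps = 1e-12  # avoid log(0)
    p = min(max(p, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    # The discriminator is rewarded for saying "real" (1) to real images
    # and "fake" (0) to generated ones.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    # Non-saturating generator loss: the generator is rewarded when the
    # discriminator mistakes its image for a real one (d_fake close to 1).
    return bce(d_fake, 1.0)


# A confident discriminator: 0.9 for the real photo, 0.1 for the fake.
print(round(discriminator_loss(0.9, 0.1), 2))  # → 0.21
print(round(generator_loss(0.1), 2))           # → 2.3
```

Training alternates between the two: the discriminator adjusts its weights to lower its loss, then the generator adjusts its own weights to lower its loss, which it can only do by producing images the discriminator scores closer to 1.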

This is a very interesting approach because quality control is built into the training itself: the discriminator acts as a strict and consistent judge, measured against the real images it has seen.

Now that we know the basics of generative models, let’s discuss Stable Diffusion itself.

Stable Diffusion is an open-source image generation model. It is free, continuously improved by its community, and far less restricted than commercial alternatives.

It was released on August 22, 2022, just over a year ago, and in that time it has gone from creating low-quality images to generating high-quality ones.

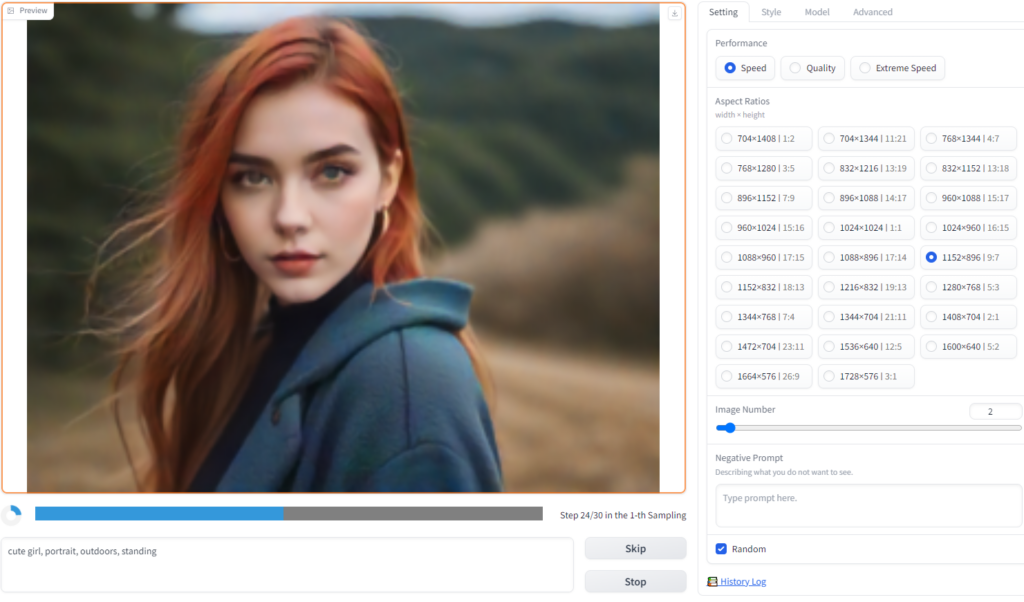

This is a quick example I created using this tool. Although I used minimal quality settings, it passes perfectly for a real photo if you’re not paying close attention.

How to use Stable Diffusion?

It’s worth noting that you’ll need a good graphics card suited to this type of task. In my case, I use an NVIDIA GTX 1050 Ti, and it’s barely sufficient for these AI models: it takes anywhere from 30 seconds to 7 minutes per image. A high-end graphics card takes only a few seconds.

Although it’s a complex tool, the user community has made it very simple to install.

The first step is to have Python installed on your computer.
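Before installing anything, it can help to confirm which Python version you have. This is a minimal sketch using only the standard `sys` module; the required version `(3, 10)` is an assumption based on Fooocus’s installation instructions as I remember them, so check the project’s current README before relying on it.

```python
import sys

# Assumption: the Python version Fooocus targets; verify against its current README.
REQUIRED = (3, 10)


def python_is_compatible(version=None, required=REQUIRED) -> bool:
    """Return True if the given (or running) Python's major.minor equals `required`."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) == tuple(required)


if __name__ == "__main__":
    found = ".".join(map(str, sys.version_info[:3]))
    if python_is_compatible():
        print(f"Python {found}: OK")
    else:
        print(f"Python {found}: expected {REQUIRED[0]}.{REQUIRED[1]}")
```

The check compares only the major and minor numbers, so any 3.10.x patch release passes.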

Python is the programming language on which this tool is based, as well as everything surrounding it, like the web interface.

Next, you’ll need to choose a front end, that is, the program you will use to work with the model.

There are many, but I recommend Fooocus. This software includes everything you need to get started.

It bundles everything from Stable Diffusion itself and the generative models to a very user-friendly web interface, which will feel familiar if you have used online image generators.

Once you’ve downloaded Fooocus, simply run it (run.bat on Windows) and wait for it to install all the necessary libraries and download the models and other support files it needs.

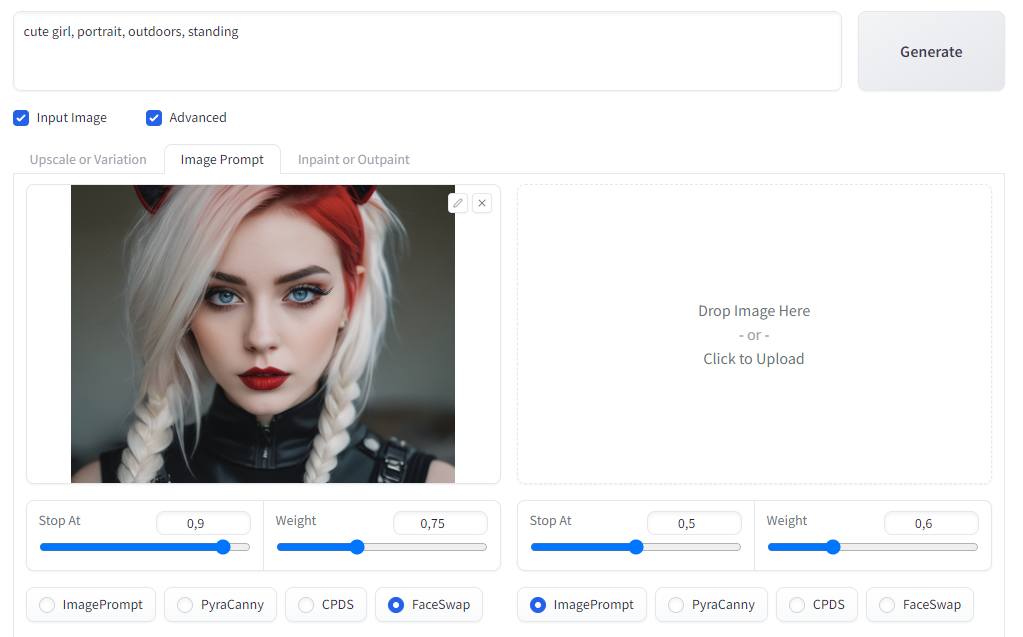

This screenshot shows Stable Diffusion (SD) generating an image in Fooocus.

Once the software is executed, we essentially have three sections: Image, Prompt, and Settings.

In the Image section, we can see the generation of our image iteration by iteration.

In the Prompt section, we can shape the content we want to generate. In this case: Cute Girl, Portrait, Outdoors, Standing.
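Fooocus only needs the prompt text itself, but if you keep many prompt variants for the same character, a tiny helper can keep them tidy. The `build_prompt` function below is a hypothetical convenience sketch, not part of Fooocus: it joins keyword tags with commas, as in the example above, and drops empty or repeated tags.

```python
def build_prompt(*tags: str) -> str:
    """Join keyword tags into a comma-separated prompt, skipping blanks and case-insensitive duplicates."""
    seen: set[str] = set()
    kept: list[str] = []
    for tag in tags:
        tag = tag.strip()
        if not tag or tag.lower() in seen:
            continue
        seen.add(tag.lower())
        kept.append(tag)
    return ", ".join(kept)


print(build_prompt("Cute Girl", "Portrait", "Outdoors", "Standing"))
# → Cute Girl, Portrait, Outdoors, Standing
```

Keeping tags as separate values makes it easy to swap one detail (say, “Outdoors” for “Indoors”) while leaving the rest of the character description unchanged.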

We can be as specific as we want, and we can even add images as prompts, which will give continuity to a specific character, as we’ll see later.

Finally, we have the Settings section: where we can adjust the resolution, quality, type of art to generate, models used, and much more.

For your first use, don’t overcomplicate things. Try a simple prompt and let it run a couple of images. This way, you can familiarize yourself with the software.

Generative models (Checkpoints)

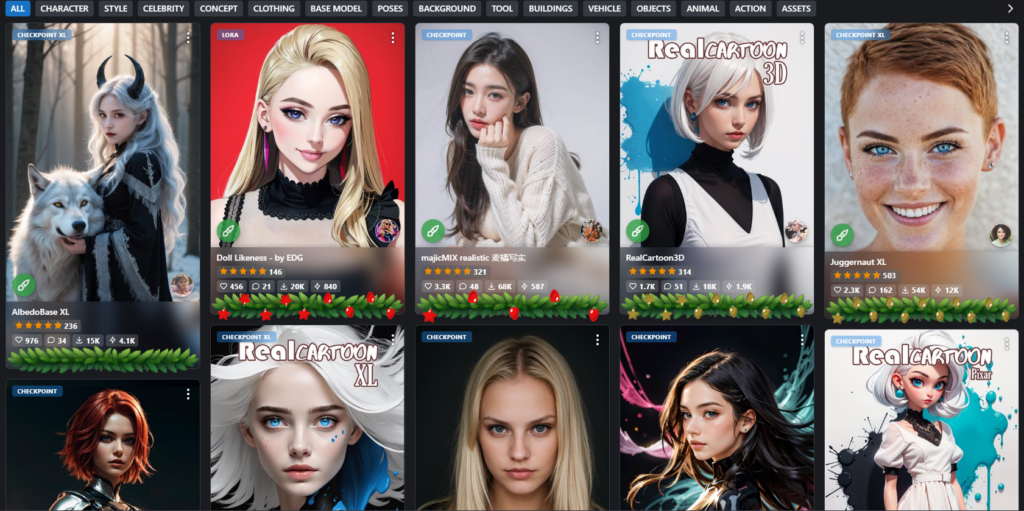

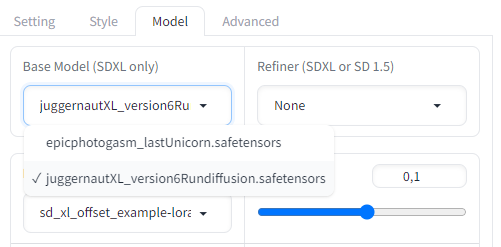

By default, Fooocus will download a generative model (checkpoint) of approximately seven gigabytes called Juggernaut XL.

This model focuses on hyper-realistic photography, which is ideal for portraits and photography uses, such as advertising or creating a fake influencer.

However, you can find models in almost any artistic style on Civitai.

On this website, community users upload models they have trained themselves, in all kinds of styles beyond hyper-realism.

You can download whichever model you want and place it inside the subfolder models/checkpoints.
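If you download checkpoints often, the copy step can be scripted. This is a minimal sketch using Python’s standard `pathlib` and `shutil`; the Fooocus folder location and file names are hypothetical placeholders, and it assumes checkpoints come as `.safetensors` or `.ckpt` files, the formats most commonly offered on Civitai.

```python
import shutil
from pathlib import Path

# Assumption: the checkpoint file formats you expect to install.
CHECKPOINT_SUFFIXES = {".safetensors", ".ckpt"}


def install_checkpoint(downloaded_file: Path, fooocus_dir: Path) -> Path:
    """Copy a downloaded checkpoint into <fooocus_dir>/models/checkpoints and return its new path."""
    downloaded_file = Path(downloaded_file)
    if downloaded_file.suffix.lower() not in CHECKPOINT_SUFFIXES:
        raise ValueError(f"Unexpected checkpoint type: {downloaded_file.name}")
    target_dir = Path(fooocus_dir) / "models" / "checkpoints"
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    target = target_dir / downloaded_file.name
    shutil.copy2(downloaded_file, target)  # copy2 also preserves file timestamps
    return target


# Example with hypothetical paths:
# install_checkpoint(Path.home() / "Downloads" / "some_model.safetensors", Path("C:/Fooocus"))
```

Copying rather than moving keeps the original download in place, in case you want to reuse it elsewhere.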

In the configuration section, under Models, you can then select the one you want.

In my experience, the best one by far is Juggernaut unless you want to create things in an epic or anime style.

Creating your own character

The crux of the matter, and what has created all this interest in AI, is none other than the famous headline: “Virtual Influencer.”

Fooocus offers an option that stands out among Stable Diffusion tools: image prompts, that is, being able to use a previous image as part of the prompt.

You can use a character you generate from scratch, or you can use existing photographs.

If you want to generate one from scratch, write a simple prompt for a photographic portrait, ideally a high-quality close-up of the face:

Close up portrait, Irish, 20s, Red hair, gothic, alternative, emo, blue eyes, red lips.

With this prompt, you can create a character like this one:

You can also use photographs from third parties, as I mentioned before.

All of this is handled by the Input Image and Advanced options, located just below the prompt box. Under Input Image, we will select Image Prompt.

Finally, we’ll enable the Advanced option that belongs to the Image Prompt tab itself, which lets us choose between ImagePrompt, PyraCanny, CPDS, and FaceSwap for each image. The last one is the one we’re interested in.

When we upload a photo and select FaceSwap underneath it, Fooocus automatically takes the face of our character and applies it to the image generated from the new prompt.

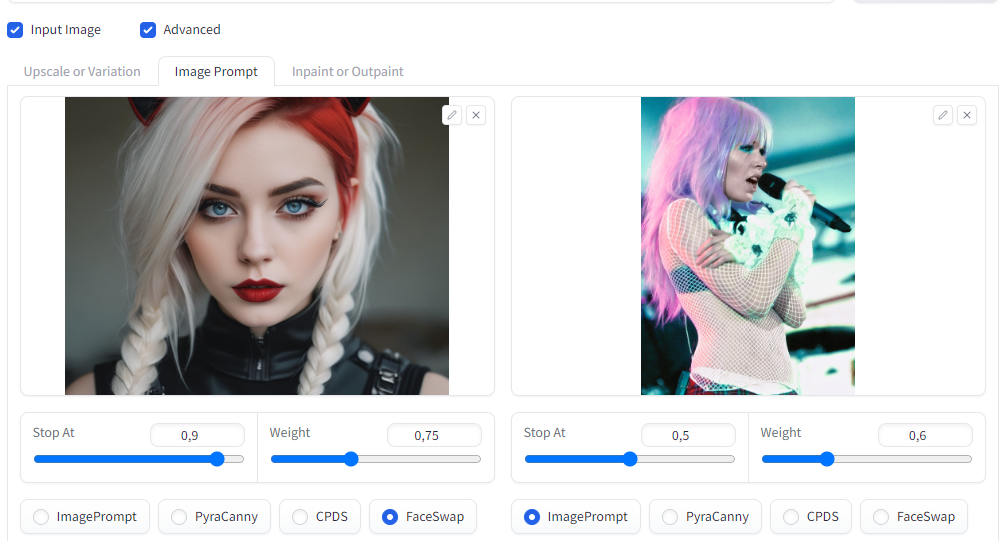

With just this, we can load up to four images in FaceSwap mode and create a unique character.

We can also use our already created model and a text prompt, and even add a second photo as an Image Prompt.

The image loaded in ImagePrompt mode contributes its colors and style, which are applied to our FaceSwap character.

For example, I want a photograph with a more emo and less gothic look, leaning towards the 2000s, an era that, if you ask me, was especially appealing aesthetically.

This is the final result:

Our model has adopted features from Kerli, such as her hair, without losing her own facial characteristics, despite the different pose.

This can be replicated over and over again to generate continuity. Instead of Kerli, we can take Emilie Autumn:

She retains the same facial characteristics but adopts her hair and subtle makeup details. Don’t ask me about the cat ears, I didn’t give them to her.

Fooocus Styles

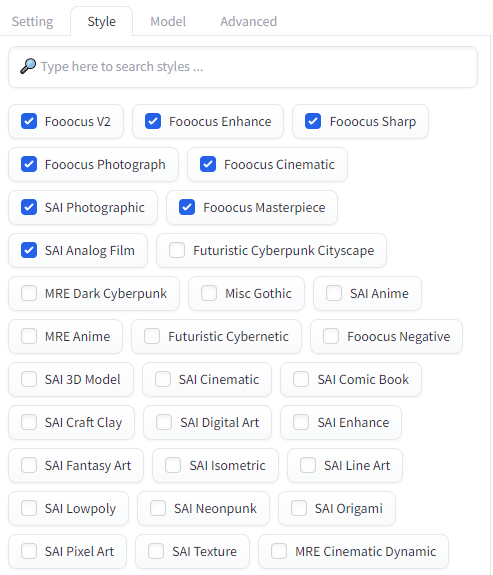

Finally, now that we know how to create our own influencer in about half an hour, we can talk about improving the images.

This is done through the styles offered in the configuration section.

I encourage you to try them all, as the styles are almost as powerful as the prompts themselves.
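Part of why styles are so powerful is that, as far as I can tell from the Fooocus repository, most of them are prompt templates: each one wraps your prompt in extra keywords and can add a negative prompt (things to avoid). The sketch below imitates that idea. The `Style` class, the template wording, and the way several styles are combined (simply joined with commas) are illustrative assumptions, not Fooocus’s actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Style:
    name: str
    prompt: str              # template: "{prompt}" marks where your own text goes
    negative_prompt: str = ""


def apply_styles(user_prompt: str, styles: list[Style]) -> tuple[str, str]:
    """Return (positive, negative) prompts after wrapping user_prompt in each style's template."""
    if not styles:
        return user_prompt, ""
    positives = [style.prompt.replace("{prompt}", user_prompt) for style in styles]
    negatives = [style.negative_prompt for style in styles if style.negative_prompt]
    return ", ".join(positives), ", ".join(negatives)


# Hypothetical template wording, for illustration only.
cinematic = Style("Cinematic", "cinematic still {prompt}, shallow depth of field, film grain", "cartoon, drawing")
positive, negative = apply_styles("close up portrait, red hair, blue eyes", [cinematic])
print(positive)  # → cinematic still close up portrait, red hair, blue eyes, shallow depth of field, film grain
print(negative)  # → cartoon, drawing
```

Seen this way, choosing a style is like having someone add a well-tested block of photography keywords to every prompt you write.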

Analog, cinematic, Gothic, Cybernetic, artistic, comic, fantasy…

We have all kinds of styles that allow us to obtain one type of photograph or another.

To create an image like the ones you’ve seen, I’ve highlighted the options I use. They are focused on professional photography.

Will AI take my job?

Now that you’ve seen the potential of these types of tools, I’d like to share some reflections with you.

These tools are here to stay. Some, like ChatGPT, have paid plans, yet the barrier to entry is practically non-existent. Others, like SD, as you’ve just seen, are free, open-source, and uncensored.

Rest assured: thanks to the complacency that pervades the world, your job probably isn’t in immediate danger.

Your boss and coworkers are too preoccupied with their day-to-day to want to thrive, make their lives easier, or do things faster.

They’re the kind of people you talk to about the influencer news, and instead of understanding the potential of these tools, they’ll talk to you about the moral harm of erotic content or the latest buzzword: misinformation.

Anything but the most important point: A computer is capable of generating content.

Look, I’m not here to argue or fight with anyone. I don’t care what they say.

The idea behind these tools is that you can use them to your advantage.

Does your job require advertising or graphic design? This tool doesn’t just create beautiful women.

I don’t know if you realize, but all the thumbnails for my articles are made with artificial intelligence.

Do you work in report writing or programming? ChatGPT can polish that report in seconds and review that code function you’ve been staring at for 3 hours, trying to figure out why it’s not working.

“It doesn’t apply to my profession.”

If you work with your hands, you’re partially correct. If your job is intellectual work, you’re probably still in shock.

Let me tell you: even if your job is based on the use of your hands, GPT is still an excellent mentor that can teach you new techniques, validate your ideas, and help you progress.

It’s up to you to step up to the next level and make your life easier, or it will be your sharp coworker who uses AI to their advantage and leaves you behind.